Guest post originally published on Medium by Lachlan Smith

Lachlan Smith from the ZeroFlucs Engineering team takes a dive into how we use strong identities, message authentication and encryption to ensure trusted, secure and contained communication in Kubernetes.

At ZeroFlucs we operate in a challenging world: our applications form part of the chain of trust that controls pricing for sportsbook operators, and as such we live with a target on our backs. At the same time, we run a modern stack that takes full advantage of micro-services, and we want all the benefits that brings: applications that are scalable, modern and flexible enough to meet future requirements.

Trust and identity are important to us; they’re part of the secret sauce, especially as our environment grows more complex. We operate hundreds of services with a small team, and need to do so in a way that’s not just effective but practical. This is especially true in a multi-tenant environment, such as our SaaS offering.

In this article, we’ll talk about some of the ways we secure our application — and how we’re leveraging Linkerd, the service mesh from Buoyant. The pincers of its mascot Linky just scream security.

Linkerd and the Data-Plane

At ZeroFlucs we are massive proponents of Linkerd, the CNCF service mesh. In fact, you can regularly find our founder Steve Gray involved with the Linkerd community — often lending other intrepid Linkerd users a hand to get started.

Unlike other service mesh offerings that cram in half-baked features or impose over-complicated topology choices, Linkerd follows the Unix model of doing one thing well, in a composable way.

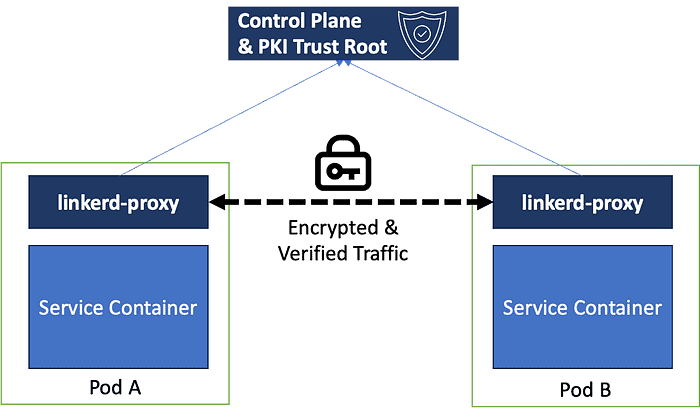

It’s a high-performance mesh, enabled by proxies running alongside your services as a sidecar container — collectively forming the data-plane of your application.

Having Linkerd under the hood also brings a number of value-adds for modern applications. One we depend on is its highly effective gRPC load balancing and instrumentation. Early in our startup journey this gave us great insight into the health of the platform and increased reliability through automatic request retries. It also brings one of the big value-adds, and the focus today: security via mTLS.

mTLS (mutual TLS) is a security mechanism that provides encryption and mutual authentication between services. Linkerd enables mTLS by default for TCP traffic between meshed pods, which means communication between those services is encrypted and each side can verify the authenticity of the other.

It also safeguards against attacks such as on-path (man-in-the-middle) attacks, spoofing and more.

If you want to learn more about Linkerd and service meshes in general, I’d highly recommend “The Service Mesh”, a post by William Morgan, one of the creators of Linkerd.

Omnipresent Mesh, Bearer of Identity

Our applications are deployed with Linkerd configured as a mandatory component, and will not start up without it being present and functional. This is the default when running Linkerd in “High Availability” configurations, and ensures that all pods receive a linkerd-proxy sidecar container.

This container is responsible for all communication in or out of the pod, and coordinates with the control-plane to obtain an identity for signing and verification, swapping the plaintext communication of the applications for mTLS.

This gives us two things of value:

- Security: We know that our traffic between pods cannot be intercepted by other pods, or at a network/physical level.

- Authentication: The mTLS certificates used to encrypt this traffic act as an identity, which can be used to restrict which services may communicate.

This has the added advantage that we can remove these concepts from our code: there’s no application-level certificate management needed to achieve end-to-end encryption.
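For a sense of what the mesh saves us, here is a minimal sketch, using only Go’s standard library, of the server-side configuration a single service would need to enforce mTLS on its own. The function name newMutualTLSConfig and its PEM inputs are illustrative assumptions, and the sketch deliberately leaves out the harder parts (issuing, distributing and rotating certificates) that Linkerd’s identity system handles for every meshed pod.

```go
package main

import (
	"crypto/tls"
	"crypto/x509"
	"errors"
)

// newMutualTLSConfig is a hypothetical helper that builds a server-side TLS
// config which demands and verifies client certificates, i.e. the per-service
// plumbing a mesh sidecar takes over.
func newMutualTLSConfig(certPEM, keyPEM, caPEM []byte) (*tls.Config, error) {
	// The service's own certificate and private key.
	cert, err := tls.X509KeyPair(certPEM, keyPEM)
	if err != nil {
		return nil, err
	}

	// The CA bundle used to verify certificates presented by clients.
	pool := x509.NewCertPool()
	if !pool.AppendCertsFromPEM(caPEM) {
		return nil, errors.New("no CA certificates found in caPEM")
	}

	return &tls.Config{
		Certificates: []tls.Certificate{cert},
		ClientCAs:    pool,
		// "Mutual": reject any client that lacks a certificate signed by the pool.
		ClientAuth: tls.RequireAndVerifyClientCert,
		MinVersion: tls.VersionTLS12,
	}, nil
}
```

Every service would need something like this, a matching client-side config and a rotation strategy; with the proxy sidecar, none of it lives in application code.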

None Shall Pass, Except Bob

Now that we’ve got an mTLS identity, let’s start using it. The first and most obvious way to implement this is to limit who can do what:

- Service A cannot talk to Service B.

- Service B can only talk to service C.

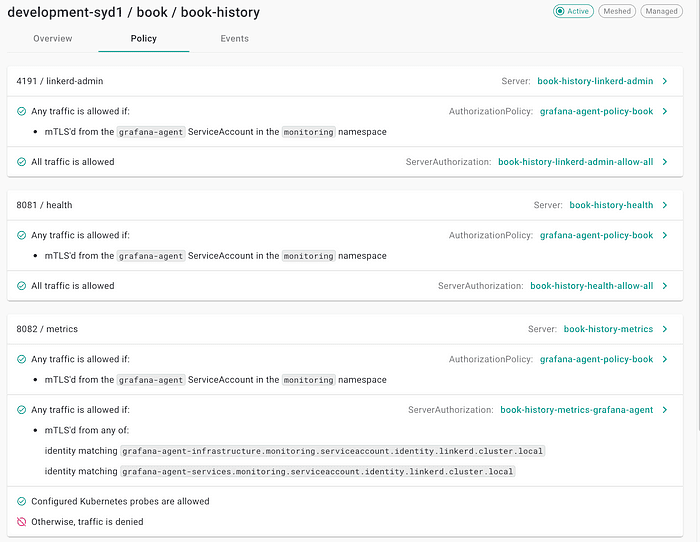

At ZeroFlucs, we’ve configured Linkerd to deny by default for all services in all namespaces: nothing gets through unless it’s on the list.

In a tenanted environment such as ours, multiple tenants or customers share the same infrastructure while keeping their data separate. Ensuring that requests originate only from permitted sources is crucial to prevent customer data from being commingled and to block malicious activity.

Today we have more than 600 rules per environment permitting specific communication paths between components. You can’t collect so much as a metric from a pod without an explicit allow, and even then it’s only allowed to come from our Grafana/Prometheus agents.
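Linkerd itself expresses these rules as Kubernetes resources (for example a deny default inbound policy plus Server and AuthorizationPolicy resources in the policy.linkerd.io API group) and enforces them in the proxy, so the following is not Linkerd code. It’s a small, hypothetical Go model of the deny-by-default semantics described above, using the Service A/B/C example: a missing rule means the call is refused. The Edge and Policy types are illustrative names, not part of any library.

```go
package main

// Edge is one permitted call path: workload From may call workload To.
// The workload names used with it are hypothetical placeholders.
type Edge struct {
	From, To string
}

// Policy is a deny-by-default allow-list: a call is permitted only if its
// exact (From, To) edge was registered.
type Policy struct {
	allowed map[Edge]bool
}

// NewPolicy builds a Policy from an explicit list of permitted edges.
func NewPolicy(edges ...Edge) *Policy {
	p := &Policy{allowed: make(map[Edge]bool, len(edges))}
	for _, e := range edges {
		p.allowed[e] = true
	}
	return p
}

// Allows reports whether from may call to. An absent edge means deny, and
// edges are directional: allowing B to call C does not let C call B.
func (p *Policy) Allows(from, to string) bool {
	return p.allowed[Edge{From: from, To: to}]
}
```

With NewPolicy(Edge{From: "service-b", To: "service-c"}), a call from service-a to service-b is refused, and service-b may call service-c but nothing else.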

Leveraging mTLS Identities at the Application Layer

Anyone who’s spent any time with Go and gRPC will be intimately familiar with the context package. Its Context type carries deadlines, cancellation signals and request-scoped values (incoming gRPC metadata among them) through a call chain, and gRPC sends a context’s deadline and outgoing metadata along with each RPC.

Linkerd automatically injects the client identity as the l5d-client-id header, which gRPC exposes in the incoming metadata attached to each request’s context.

An example of a simple function to extract this data from the context is as follows:

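```go
package identity

import (
	"context"
	"fmt"

	"google.golang.org/grpc/metadata"
)

// GetLinkerdIdentities returns the client identities the Linkerd proxy
// attached to an incoming gRPC request as the l5d-client-id header.
func GetLinkerdIdentities(ctx context.Context) ([]string, error) {
	// gRPC stores the request's incoming metadata (headers) on its context.
	meta, metaFound := metadata.FromIncomingContext(ctx)
	if !metaFound || meta == nil {
		return nil, fmt.Errorf("failed to extract context metadata")
	}

	// MD.Get lower-cases the key and returns every value stored under it.
	ids := meta.Get("l5d-client-id")
	return ids, nil
}
```

This will return the set of identities attributed to the incoming request. The general form of an identity is: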

service_a.namespace_b.serviceaccount.identity.linkerd.cluster.local

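This means the request came from a workload running under the service_a service account, in namespace namespace_b, in the local cluster. Linkerd bases the identity on the pod’s Kubernetes ServiceAccount; the trailing linkerd and cluster.local are the control-plane namespace and the trust domain. With that information in hand, we can make some application-level policy decisions: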

- Is that service allowed to interact with the specific message queues, tenancies or other resources?

- Does the payload coming in have the required per-source metadata we expect?
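Most of these checks start by splitting an identity into its parts, for example to map the caller’s namespace onto a tenancy. Below is a minimal sketch assuming the identity format shown above, that is &lt;service account&gt;.&lt;namespace&gt;.serviceaccount.identity.&lt;control-plane namespace&gt;.&lt;trust domain&gt;. ParseLinkerdIdentity and LinkerdIdentity are hypothetical helpers, not part of Linkerd or gRPC.

```go
package main

import (
	"fmt"
	"strings"
)

// identityMarker separates the workload part of a Linkerd identity from the
// control-plane namespace and trust domain that follow it.
const identityMarker = ".serviceaccount.identity."

// LinkerdIdentity holds the components of an l5d-client-id value.
type LinkerdIdentity struct {
	ServiceAccount string // the workload's Kubernetes service account
	Namespace      string // the workload's Kubernetes namespace
	ControlPlane   string // Linkerd control-plane namespace, e.g. "linkerd"
	TrustDomain    string // e.g. "cluster.local"
}

// ParseLinkerdIdentity splits an identity string into its components.
func ParseLinkerdIdentity(id string) (LinkerdIdentity, error) {
	i := strings.Index(id, identityMarker)
	if i < 0 {
		return LinkerdIdentity{}, fmt.Errorf("not a Linkerd identity: %q", id)
	}
	workload, rest := id[:i], id[i+len(identityMarker):]

	// Namespaces are DNS labels (no dots), so the last dot in the workload
	// part separates the service account name from the namespace.
	dot := strings.LastIndex(workload, ".")

	// The control-plane namespace is one label; the rest is the trust domain.
	cp, domain, ok := strings.Cut(rest, ".")

	if dot <= 0 || dot == len(workload)-1 || !ok || cp == "" || domain == "" {
		return LinkerdIdentity{}, fmt.Errorf("malformed Linkerd identity: %q", id)
	}
	return LinkerdIdentity{
		ServiceAccount: workload[:dot],
		Namespace:      workload[dot+1:],
		ControlPlane:   cp,
		TrustDomain:    domain,
	}, nil
}
```

Anchoring on the fixed ".serviceaccount.identity." marker, rather than splitting on every dot, keeps the parse correct for trust domains such as cluster.local that contain dots themselves.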

There’s a wide range of things you can do with this, but there’s one important caveat: you can only trust this identity if you’ve got High Availability mode turned on and the proxy injector set to “Fail” the admission of pods to the cluster on error. Otherwise, it’s possible to impersonate another service by spoofing the header.

This has become a powerful tool for us. It gives us highly secure baseline permissions, and even within an application, the permissions in effect for an individual gRPC call can vary based on the caller’s identity. As we expand our multi-tenant architecture, we find ourselves using this more and more.
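To illustrate how per-call permissions can hang off the caller’s identity, here is a small deny-by-default check that takes the identities returned by GetLinkerdIdentities and a resource name. ResourcePolicy, Authorize and the tenant/queue resource names are hypothetical, and requiring exactly one identity per request is a conservative assumption made for this sketch, not something Linkerd mandates.

```go
package main

import "fmt"

// ResourcePolicy maps a full Linkerd identity to the set of resources
// (hypothetical tenant or queue names) that caller may touch.
// Anything not listed is denied.
type ResourcePolicy map[string]map[string]bool

// Authorize checks the identities taken from l5d-client-id against the
// policy for a single resource and returns nil only if access is allowed.
func (p ResourcePolicy) Authorize(ids []string, resource string) error {
	// Conservative assumption: a request must carry exactly one identity.
	if len(ids) != 1 {
		return fmt.Errorf("expected exactly one client identity, got %d", len(ids))
	}
	// Indexing a missing key yields a nil map, and indexing that yields
	// false, so unknown callers and unlisted resources are both denied.
	if !p[ids[0]][resource] {
		return fmt.Errorf("identity %q may not access %q", ids[0], resource)
	}
	return nil
}
```

In a real service, a check like this could sit in a gRPC unary interceptor so every handler gets it without repeating the logic.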

The Future and Gateway API

With the advances in Linkerd 2.13 and the evolution of standards through the Kubernetes GAMMA initiative, we expect the Kubernetes Gateway API will eventually let us remove more of these concepts from our code. Until then, we’re sitting in a pretty good place.

Thanks for Reading

We’re happy with our setup today: Linkerd secures the backbone of our runtime environment, and its core features are well positioned and easily leveraged in our Go- and gRPC-based application stack. It’s essentially made robust security and verification trivial for us.

If you have any questions, we’re happy to share our experience: you can comment here, reach out on LinkedIn or find us on the Linkerd Slack.